HyperLogLog Explained

Ever wonder how systems count billions of unique items without melting down? Dive into HyperLogLog, the clever algorithm that estimates massive numbers with tiny memory and surprising accuracy.

Prasad Bhamidipati

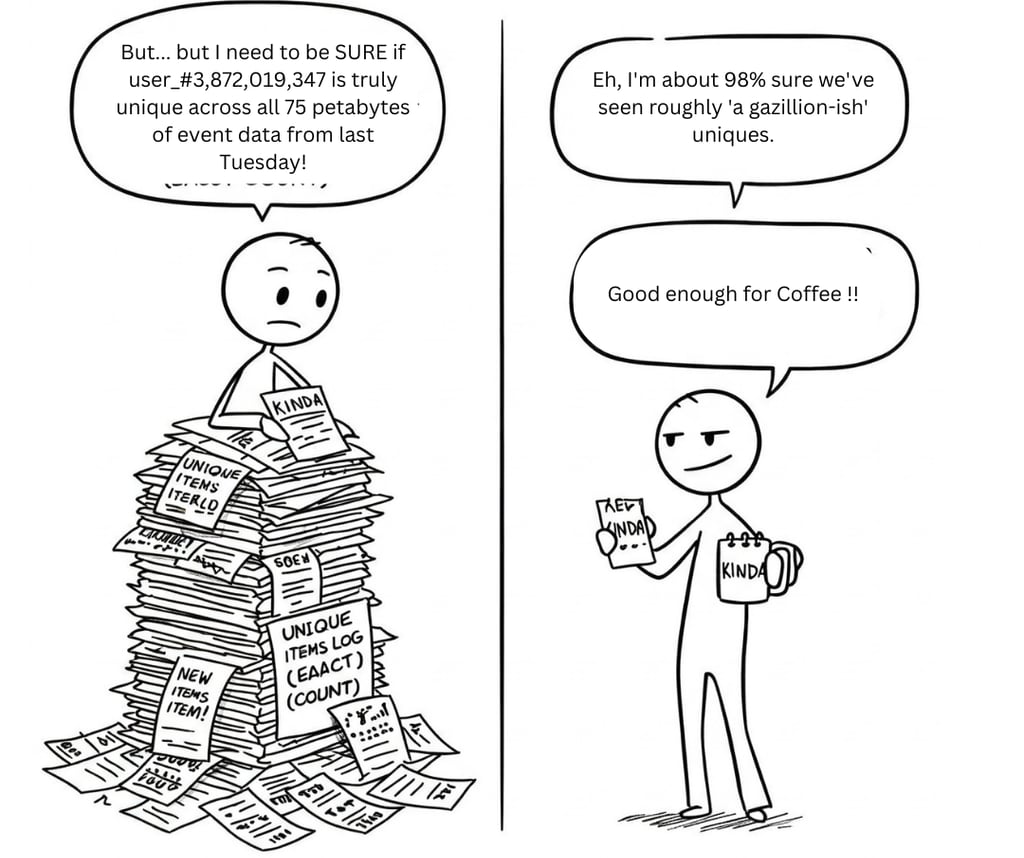

In the world of big data, seemingly simple questions can become surprisingly complex. One such question is: "How many unique items are there in this massive dataset?" Imagine trying to count the distinct visitors to a popular website, the unique words in a giant corpus of text, or the different IP addresses accessing your server. A naive approach of storing every unique item encountered quickly becomes unfeasible as datasets scale to billions or even trillions of entries. This is where probabilistic data structures like HyperLogLog (HLL) come to the rescue.

The Need: Why Counting Uniques is Hard at Scale

Consider the challenge of counting unique visitors to a website like Google or Facebook:

Massive Data: These platforms handle billions of events per day. Each event might carry an identifier (like a user ID or IP address) that you want to factor into your unique count.

Real-time Requirement: Often, you need these counts quickly, or even in real-time, for monitoring, anomaly detection, or dynamic analytics dashboards.

Memory Constraints: Storing every single unique visitor ID would require an enormous amount of memory. If each visitor ID is, say, 64 bytes, and you have a billion unique visitors, that's 64 GB of RAM just for this one count! This is often impractical for many applications running on standard hardware.

In summary, traditional methods are accurate but demand memory proportional to the number of unique items. For very large numbers of uniques, this is too costly in terms of memory, and the computational effort to manage such a large data structure also becomes a bottleneck.

Where Can HyperLogLog Be Used?

HyperLogLog shines in scenarios where an approximate count of unique items is acceptable, and memory efficiency is paramount. Some common use cases include:

Database query optimization (estimating column uniqueness).

Network traffic monitoring (counting distinct IP addresses or connections).

Web analytics (estimating unique visitors or search queries).

Real-time data streams (tracking unique events on the fly).

The key trade-off is a small, predictable error in the count for a massive reduction in memory. It can estimate cardinalities in the trillions with a typical error rate of a few percent using only a tiny amount of memory (often just kilobytes).

Building Intuition: The Surprising Power of Rare Events

Imagine you're asking a large crowd of people to flip a coin repeatedly until they get their first "Heads." Each person then tells you how many "Tails" they got in a row before that first "Heads."

If only a few people participate, the longest run of "Tails" anyone reports will probably be short (e.g., 0, 1, or 2 Tails). It would be very surprising if someone got, say, 10 Tails in a row.

If millions of people participate, it's much more likely that at least one person will experience a very long run of "Tails" (e.g., 10, 15, or even more).

The core idea: The maximum length of a "rare pattern" (like a long run of Tails) observed gives us a clue about how many unique "events" (people participating) there were. A longer maximum run suggests more participants.

HyperLogLog applies this to data:

Unique "Fingerprints": Each unique data item (like a visitor ID) is converted into a consistent, random-looking digital "fingerprint" using a process called hashing. This fingerprint is usually a sequence of 0s and 1s.

Looking for "Rare Patterns": In these binary fingerprints, a long run of leading zeros (e.g., 000001...) is statistically rare for any single item.

The Link: If we process many unique items, we're more likely to encounter an item whose fingerprint, by chance, exhibits a very long run of leading zeros. The longest run of leading zeros we observe gives us an estimate of how many unique items we've seen.

This is the basic intuition. A single such observation can be misleading due to luck, so HyperLogLog uses a more robust approach.

How HyperLogLog Works

Let's start with a very basic idea and then see how HyperLogLog refines it.

1. The Core Idea with a Single "Scorekeeper":

Imagine we just kept track of the longest run of leading zeros seen across all item fingerprints processed so far. If that maximum was, say, 5 zeros, we might guess we've seen around 25 (or 26) items.

The Problem: This is too sensitive to luck. The very first item might have a fluke long run, or we might process many items without seeing a truly representative long run. The estimate would be very unstable.

2. Improving Accuracy: Using Many "Scorekeepers"

To make the estimate reliable, HyperLogLog uses many "scorekeepers," called registers (or buckets). Think of them as many small notepads.

Preparation (Initialization):

The algorithm first sets up a fixed number of these registers, let's say m of them (e.g., 1024 or 2048). Each register is initialized to 0.

Looking at Each Item (Processing with Multiple Registers): When a new data item arrives:

Get its Fingerprint (Hashing): The item is hashed to get its binary fingerprint.

Pick a Register: A portion of this fingerprint is used to select one of the m registers. This ensures items are distributed somewhat randomly among the registers.

Find the "Rareness Score": The algorithm then looks at another part of the fingerprint and counts the number of leading zeros there. Let's call this count k.

Update the Chosen Register's Record: For the register that was picked, its current value is compared with this new k. The register always keeps the highest k value it has seen so far from any item assigned to it. So, if the new k is higher, the register is updated. Otherwise, it remains unchanged.

After all items are processed, each of the m registers holds the maximum k (longest run of leading zeros) it observed for the items mapped to it.

3. Making the Educated Guess (Estimation):

Now we have m scores (one from each register). How do we combine these into a single estimate?

Smart Averaging: Simply averaging these m scores directly isn't the best way, as some registers might have unusually high scores due to chance. HyperLogLog uses a more robust averaging technique (related to the harmonic mean).

The Concept: Each register's score (k) is transformed. This transformation gives more influence to typical scores and less influence to extremely high, rare scores. These transformed scores are then combined into a single summary indicator. This helps to smooth out the "luck" factor.

Scaling to the Final Estimate: This summary indicator isn't the final count yet. It needs to be scaled up. The algorithm uses a scaling calculation that takes into account the number of registers (m) and a special calibration constant (determined by the algorithm's designers through statistical analysis). This scaling step converts the summary indicator into the final estimate of the number of unique items.

Disclaimer: The precise formulas for the transformation, combination, and scaling involve some mathematics, but the core idea is to robustly average the observations from many registers to get a stable estimate.

4. Fine-Tuning the Guess (Corrections):

The main estimation method works well for a wide range of counts. However, for very small or extremely large numbers of unique items, small adjustments can improve accuracy:

For Very Small Counts: If the estimated count is very low and many registers are still at 0 (meaning they haven't really observed any significant patterns yet), the algorithm may use a different, simpler estimation method that is more accurate for low numbers (often by looking at the proportion of registers that are still zero).

For Extremely Large Counts: If the estimated count is so large that it's approaching the theoretical limit of unique fingerprints possible, another adjustment is made. This accounts for the increasing chance that different items might accidentally get the same fingerprint ("hash collisions"), which could otherwise make the estimate too low.

Disclaimer: These corrections also involve specific calculations, but their purpose is to make the final estimate more accurate across the entire spectrum of possible counts.

Recap of choices

Hashing: To get a consistent, random-looking binary fingerprint for each item.

Many Registers (m): This is the key to reducing the impact of "luck." It's like getting many opinions (mini-experiments) and averaging them, which is more reliable than just one.

Register Selection: To spread items fairly among the registers.

Max Leading Zeros (k) in each Register: The raw "rareness" observation from each mini-experiment.

Smart Averaging & Scaling: To combine all these observations robustly and convert them into a sensible final count.

Corrections: To polish the estimate at the extreme low and high ends.

Memory Usage

The magic of HyperLogLog is its tiny memory footprint. Because it only stores m small numbers (each representing a count of leading zeros, which doesn't need much space, e.g., 5-6 bits per register), the total memory used is very small. For instance, with 2048 registers, each needing perhaps 6 bits, the total is around 2048×6 bits≈1.5 kilobytes. This memory size is fixed and does not depend on the number of items you're counting, even if it's billions or trillions!

Closing Thoughts

HyperLogLog is a powerful example of a probabilistic algorithm. It cleverly trades a small, controllable amount of error for enormous gains in memory efficiency and often speed. It allows us to ask "how many unique things?" about datasets so large that exact counting would be impossible or impractical.

Key Takeaways:

It's an Estimate: HyperLogLog provides a very good approximation, not an exact count. The accuracy depends on the number of registers used (more registers = higher accuracy = slightly more memory).

Incredibly Space-Efficient: Its main advantage is its tiny, fixed memory usage.

Fast: Processing each item is very quick.

Mergeable: Sketches (the state of the registers) from different datasets can be easily combined to estimate the unique count of their union, which is very useful in distributed systems.

Understanding the core intuition—that observing rarer patterns becomes more likely as you encounter more unique items, and that averaging many such observations leads to stability—is key to appreciating why HyperLogLog is such a widely used tool in the world of big data.

Expert articles on AI and enterprise architecture.

Connect

prasadbhamidi@gmail.com

+91- 9686800599

© 2024. All rights reserved.