The Hidden Economics of AI Agents: KV-Cache Optimization That Saves 90% on Costs

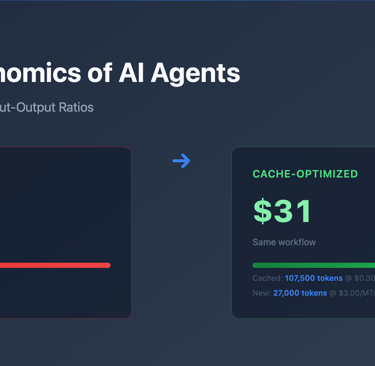

AI agent systems exhibit extreme input-to-output token ratios (100:1+) that create massive inference costs when KV-cache optimization fails. Most teams discover this architectural requirement too late, after deploying systems that accidentally defeat caching through common design patterns. This technical guide covers the specific architectural decisions that can reduce agent inference costs by 70-90%.

Prasad Bhamidpati

The Agent Cost Problem

Most teams building AI agents focus on model selection and prompt engineering. They miss the bigger cost driver: KV-cache efficiency. The difference between a cache-hostile and cache-optimized agent architecture is often 10x in inference costs.

Agents have fundamentally different cost patterns than chatbots. A typical chat interaction involves a user query of maybe 50-200 tokens and a model response of similar length. The input-to-output ratio stays roughly balanced at 1:1 to 5:1.

Agent systems work differently. They maintain large contexts containing system prompts, comprehensive tool definitions, and growing action-observation histories. A single agent iteration might process 8,000 input tokens but output only 80 tokens for a structured tool call. The agent reads extensive context to decide on a small, specific action.

This creates extreme input-to-output ratios of 100:1 or higher. Each iteration appends new action-observation pairs to the growing context, but the output remains consistently short. The agent spends most of its compute budget processing context rather than generating responses. This pattern makes caching efficiency critical for cost control.

The economics are clear:

OpenAI: $3/MTok uncached vs $0.30/MTok cached

Anthropic: $15/MTok vs $1.50/MTok

Self-hosted: Full recomputation vs memory-efficient serving

Most agent architectures accidentally defeat caching optimization. Teams discover this after deploying to production when inference bills spike unexpectedly.

1. Agent Context Patterns vs Everything Else

Agents follow different context patterns than other LLM applications. Understanding these patterns is necessary for cost-effective agent systems.

A typical agent context structure:

System prompt (500 tokens) + Tool definitions (2,000 tokens) +

Action₁ (50 tokens) → Observation₁ (1,200 tokens) +

Action₂ (45 tokens) → Observation₂ (800 tokens) +

...continues growing...

The cost implications compound quickly. Consider a 10-step workflow:

- Iteration 1: 2,750 total tokens

- Iteration 5: 2,500 base + 5,475 history = 7,975 tokens

- Iteration 10: 2,500 base + 10,950 history = 13,450 tokens

Total pre-filling across all iterations: 2,750 + 5,975 + 7,975 + 9,975 + 11,975 + 13,450 = exponential growth in compute.

Without caching, this becomes expensive fast. Agents naturally create cacheable contexts if architected correctly. The system prompt and tool definitions rarely change between iterations. Most of the growing context consists of deterministic action-observation pairs that can be cached incrementally.

2. KV-Cache Mechanics and Failure Modes

KV-caching works through exact prefix matching. The cache stores key-value pairs from transformer attention computations for reuse when the same token sequence appears again. Even single-token differences invalidate cached content from that point forward.

This creates fragility. Consider these contexts:

Cached: "The current time is 2024-01-15 14:30:00..."

New: "The current time is 2024-01-15 14:30:01..."

The one-second difference invalidates the entire cache.

Autoregressive language models compute each token based on all previous tokens. This dependency chain means cache invalidation cascades. If token N differs, tokens N+1, N+2, etc. must be recomputed regardless of whether their content is identical.

Provider implementations vary significantly. OpenAI enables automatic prefix caching in chat completions. Anthropic offers explicit prompt caching with manual cache points. Self-hosted systems like vLLM require configuration and request routing for cache effectiveness.

The cache matching is literal. JSON serialization order matters. Floating point precision matters. Unicode normalization matters. Any source of non-determinism breaks caching.

3. Common Architecture Anti-Patterns

Several design patterns consistently break KV-cache efficiency. These often seem reasonable during development but create permanent cost overhead.

Timestamp Precision Issues

Including high-precision timestamps in system prompts kills caching. Many teams add timestamps so models can reference current time. But millisecond or second-level precision means every request generates a unique context prefix.

Cached: "The current time is 2024-01-15 14:30:00..."

New: "The current time is 2024-01-15 14:30:01..."

JSON Serialization Non-Determinism

Programming languages don't guarantee object property ordering. JavaScript, Python dictionaries, and similar structures can serialize the same logical content in different orders.

// Same object, different serialization

{"tool": "search", "query": "data", "limit": 10}

{"limit": 10, "tool": "search", "query": "data"}

This creates cache misses for logically identical content. The solution requires explicit key ordering in all serialization.

Dynamic Tool Management

Modifying tool definitions mid-workflow breaks caching. Tool definitions typically appear early in the context after the system prompt. Changes invalidate all subsequent cached content.

Teams often implement tool selection by adding/removing tools from the context based on workflow state. This seems logical but destroys cache efficiency. Each tool set change forces full recomputation.

UUID and Session Management

Request correlation IDs, session UUIDs, and similar identifiers create uniqueness when placed in cacheable content. These identifiers serve legitimate purposes but should not appear in cached context regions.

4. Cache-Optimized Architecture Patterns

Effective cache optimization requires specific architectural patterns. These patterns maintain functionality while maximizing cache reuse.

Ordered Append-Only History Pattern

Never modify existing context content. Never reorder previous entries. Only append new action-observation pairs in strict chronological sequence. This preserves cache validity for all previous content.

The context structure maintains rigid ordering:

[Immutable system prompt] →

[Static tool definitions] →

[Action₁ → Observation₁ → Action₂ → Observation₂ → ... → ActionN]

Each iteration appends exactly one action-observation pair to the end. The ordering never changes. Previous actions are never moved, grouped, or reorganized. This strict sequential pattern is essential for cache efficiency.

Many systems break this pattern by attempting to optimize context organization. Some try to group similar actions together. Others reorder entries by priority or relevance. These modifications destroy caching even when the logical content remains identical.

The ordered append-only pattern means accepting context that may not be optimally organized for human readability. The trade-off favors cache efficiency over context aesthetics.

Deterministic Serialization

All content serialization must be deterministic and consistent. This requires:

- Explicit key ordering for JSON objects

- Standardized number formatting (avoid 1.0 vs 1 variations)

- Unicode normalization (NFC) for text content

- Consistent whitespace and formatting rules

Tool Control via Logit Masking

Instead of removing unavailable tools from the context, control tool selection through constrained decoding. Tools remain in the context but logit masking prevents their selection when inappropriate.

# Pseudo-code for tool masking

if tool_available_in_current_state(tool_name):

allow_token_generation(tool_tokens)

else:

mask_token_logits(tool_tokens, negative_infinity)

This approach keeps tool definitions static while controlling behavior through the decoding process.

Strategic Cache Boundaries

Some providers support manual cache boundary placement. Position boundaries after stable content (system prompt + tools) and before variable content (action history).

Cache boundary placement affects cost-effectiveness. Boundaries should encompass maximum stable content while accounting for cache expiration policies.

5. Implementation and Performance Considerations

Implementing cache-optimized architectures requires changes throughout the system. These changes affect development practices, operational monitoring, and provider selection.

Development Impact

All serialization code must guarantee deterministic output. This affects JSON libraries, object iteration, and data structure handling. Development teams need coding standards that preserve cache efficiency.

State management becomes append-only. Modifying previous actions or observations breaks the optimization. Systems must track agent state without mutating context history.

Testing requires cache hit rate measurement. Traditional functional testing doesn't reveal cache efficiency problems. Teams need instrumentation to track caching performance.

Operational Monitoring

Cache hit rates become primary performance indicators. Teams should target 80%+ hit rates for mature agent workflows. Lower rates indicate architecture problems or workflow complexity issues.

Cost per agent step provides economic measurement. This metric captures the combined effect of context efficiency and cache performance.

Context growth rates reveal workflow efficiency. Linear growth is expected, but super-linear growth suggests architecture problems.

Provider Considerations

Different providers offer different caching capabilities. OpenAI provides automatic prefix caching. Anthropic requires explicit prompt caching configuration. Self-hosted systems need prefix caching enabled and proper request routing.

Multi-provider strategies require architecture that works across different caching implementations. Some optimizations work better with specific providers.

Performance Results

Well-implemented cache optimization typically delivers:

- 70-90% cost reduction compared to unoptimized systems

- 5-10x improvement in time-to-first-token

- Better system throughput due to reduced compute requirements

The optimization compounds with workflow complexity. Simple agents see moderate improvements. Complex multi-step agents see dramatic cost reductions.

Conclusion

KV-cache optimization represents a fundamental architectural requirement for agent systems, not an optional performance enhancement. The difference between cache-optimized and cache-hostile designs directly impacts unit economics and system scalability.

Most teams discover cache optimization too late, after deploying systems with embedded inefficiencies. Retrofitting cache optimization into existing architectures is significantly more expensive than designing for caching from the start.

The technical patterns are well-understood: append-only contexts, deterministic serialization, static tool definitions, and strategic cache boundaries. The implementation requires discipline in development practices and operational monitoring.

Teams that master cache-optimized agent architecture gain significant competitive advantages through lower operational costs and better system performance. These advantages compound as agent complexity and usage scale increase.

Expert articles on AI and enterprise architecture.

Connect

prasadbhamidi@gmail.com

+91- 9686800599

© 2024. All rights reserved.